
At Criteo, performance is everything. The serialization formats we considered were Protocol Buffers, Thrift, Avro, JSON and XML. We did the benchmarking using a specialized benchmarking library and C# on .NET 4.5.

The Data Model

Before digging into the implementation details, let us look at the data model.

Our data is similar to an Excel workbook. It has many pages, and each page is a table.

In our case, each table has some keys indexing the rows. One difference from Excel, though, is that each cell may contain more than a single item: it could be a list of floating-point values, a dictionary of floating-point values, a dictionary of dictionaries, and so on. We represent data in a table-like structure. Each column may have a different data type, and every cell in a column has the same data type, as shown in the table below. Cell values are implemented as subclasses of a base class called IData, with one implementation of IData for each type of data structure we want to put in cells.

Key    | Column1 | Column2 | Column3    | Column4
String | double  | Double  | Dictionary | Dictionary
String | double  | Double  | Dictionary | Dictionary
String | double  | Double  | Dictionary | Dictionary
String | double  | Double  | Dictionary | Dictionary

Table 1: Example of the table-like structure.

To have a fixed sample of data to serialize, we wrote a data generator that randomly generates the possible values for each column type.
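To make the data model concrete, here is a minimal Python sketch of the table and of a seeded random generator. The class names (DoubleData, DoubleListData, DoubleDictData, DictOfDictData) are hypothetical stand-ins for the C# IData subclasses. The column types are an assumption based on the kinds of values listed above, not the actual Criteo schema.

```python
import random
from dataclasses import dataclass
from typing import Dict, List

# Hypothetical stand-ins for the C# IData subclasses: one class per cell shape.
@dataclass
class DoubleData:
    value: float

@dataclass
class DoubleListData:
    values: List[float]

@dataclass
class DoubleDictData:
    values: Dict[str, float]

@dataclass
class DictOfDictData:
    values: Dict[str, Dict[str, float]]

# Every cell in a column has the same type, so one class describes a column.
# These column types are an assumption, not the actual schema.
SCHEMA = {
    "Column1": DoubleData,
    "Column2": DoubleListData,
    "Column3": DoubleDictData,
    "Column4": DictOfDictData,
}

def random_cell(kind, rng, size):
    """Generate one random cell of the given kind; `size` bounds collection lengths."""
    if kind is DoubleData:
        return DoubleData(rng.random())
    if kind is DoubleListData:
        return DoubleListData([rng.random() for _ in range(size)])
    if kind is DoubleDictData:
        return DoubleDictData({f"k{i}": rng.random() for i in range(size)})
    return DictOfDictData({f"k{i}": {f"k{j}": rng.random() for j in range(size)}
                           for i in range(size)})

def generate_table(rows, size, seed=42):
    """Build a table keyed by string row keys; a fixed seed gives a fixed sample."""
    rng = random.Random(seed)
    return {f"row{r}": {col: random_cell(kind, rng, size) for col, kind in SCHEMA.items()}
            for r in range(rows)}

small_table = generate_table(rows=3, size=5)    # small objects: fewer than 10 items
big_table = generate_table(rows=3, size=200)    # big objects: hundreds of items
```

Seeding the generator is what turns random data into a fixed sample. The same seed always produces the same table, so every format serializes identical input.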

The XML Story

The original implementation was in XML, so it became the reference benchmark for the other formats. Implementation was easy using the standard .NET runtime serialization: simply decorate the classes with the correct attributes and voilà.

Figure 1: DataContract annotations for serialization

The interesting parts are the “DataContract” and “DataMember” attributes, which tell the serializer which members to serialize.

The JSON Story

JSON is supposed to be faster and more lightweight than XML. The serialization is handled by the Newtonsoft library, which is easily available in C#. There is just one small glitch. To correctly serialize and deserialize such dynamic data, we had to set the type name handling to automatic (TypeNameHandling.Auto), and this resulted in JSON text with a type field.

The Protocol Buffer Story

Protocol Buffers get a lot of hype. That makes some sense, because binary formats are usually faster than text formats, and the data model for the messages can be generated in many languages from the protobuf IDL file. The drill here is to create the IDL, generate C# objects, write converters and serialize! But wait, which library should we use?

We found at least three different NuGet packages, two of which claimed to implement the same version of Protobuf v3. After much investigation, we realized that Google.Protobuf is provided by Google and had the best performance. Protobuf3 is compiled by an individual from the same source code, but it is slower. There is more than one way to solve the problem with protobuf, so we decided to try three different implementations and compare their performance.

First Implementation

This implementation is referenced as protobuf-1 in our benchmarks. The design had to solve the problem of storing a polymorphic list. This had to be done using inheritance, and there are different ways of implementing it. We compared them and chose the type identification field approach, as it had better performance. Here, each cell of the table would contain one DataColumnMessage object, with one field filled with values and the rest of the fields left null. Protobuf does not store null values for optional fields, so the file size should not change a lot. But this still meant 4 null values per cell, and if the number of fields increases, the number of null values grows with it.
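The wire-level effect of the type identification field approach can be sketched in a few lines of Python. This is a hand-rolled encoder for a small subset of the protobuf wire format (varints, 64-bit doubles, packed repeated doubles), not Google.Protobuf. The cell layout is hypothetical: field 1 holds the type id, field 2 a single double, and field 3 a list of doubles. The sketch shows the point made above: fields left unset cost zero bytes, but every cell pays for its own type id.

```python
import struct

WIRE_VARINT, WIRE_64BIT, WIRE_LEN = 0, 1, 2

def varint(n):
    """Encode a non-negative integer as a protobuf base-128 varint."""
    out = bytearray()
    while True:
        low7, n = n & 0x7F, n >> 7
        if n:
            out.append(low7 | 0x80)  # more bytes follow
        else:
            out.append(low7)
            return bytes(out)

def field_key(field_number, wire_type):
    """A field key is (field_number << 3) | wire_type, encoded as a varint."""
    return varint((field_number << 3) | wire_type)

# Hypothetical cell layout for the "type identification field" approach.
TYPE_DOUBLE, TYPE_DOUBLE_LIST = 1, 2

def encode_cell(type_id, value=None, values=None):
    """Encode one cell; only the fields that are actually set are written."""
    out = field_key(1, WIRE_VARINT) + varint(type_id)
    if value is not None:
        out += field_key(2, WIRE_64BIT) + struct.pack("<d", value)
    if values is not None:
        packed = b"".join(struct.pack("<d", v) for v in values)
        out += field_key(3, WIRE_LEN) + varint(len(packed)) + packed
    return out  # fields left as None add no bytes at all

# 11 bytes: 2 for the type id, then 1 key byte plus 8 bytes for the double.
single = encode_cell(TYPE_DOUBLE, value=1.5)
```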


Does that affect the performance? Keep reading for the comparison of results.

Second Implementation

This implementation is referenced as protobuf-2 in our benchmarks.
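The column-based design described in the next paragraph replaces one sub-message per cell with one message per column. Its effect on size can be estimated with simple byte arithmetic. The Python sketch below makes several assumptions: every cell holds one double, the type id is 1, field keys take a single byte, and the repeated field uses packed encoding. It illustrates protobuf wire-format sizes and does not reproduce the benchmark.

```python
DOUBLE_BYTES = 8
KEY_BYTES = 1  # field numbers below 16 need a single-byte key

def varint_len(n):
    """Number of bytes a non-negative integer takes as a protobuf varint."""
    size = 1
    while n >= 0x80:
        n >>= 7
        size += 1
    return size

def per_cell_size(n_cells, type_id=1):
    """protobuf-1 style: every cell is its own sub-message carrying a type id."""
    cell = KEY_BYTES + varint_len(type_id) + KEY_BYTES + DOUBLE_BYTES
    # each cell is embedded as a length-delimited sub-message: key + length + body
    return n_cells * (KEY_BYTES + varint_len(cell) + cell)

def per_column_size(n_cells, type_id=1):
    """protobuf-2 style: one message per column, one type id, packed values."""
    packed = n_cells * DOUBLE_BYTES
    return KEY_BYTES + varint_len(type_id) + KEY_BYTES + varint_len(packed) + packed

for n in (5, 100, 1000):
    print(n, per_cell_size(n), per_column_size(n))
```

Under these assumptions, per_cell_size(100) is 1300 bytes and per_column_size(100) is 805. The per-cell layout spends 5 bytes of framing and type id on every 8-byte value, while the column layout pays that overhead once.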

We knew that each column has the same data type, so we tried a column-based design. Instead of creating one value for each cell, we decided to create one object per column. This object stores one field for the type of the objects stored, and a repeated field with one value per cell. This drastically decreases the number of null values and the number of “type” fields. We believed that this should improve performance quite a lot compared to the previous version.

Third Implementation

This implementation is referenced as protobuf-3 in our benchmarks.

We wanted to leverage the new “map” keyword introduced in protobuf version 3 and benchmark its performance. This is the new, specialized way of defining dictionaries, so we were hoping for performance improvements. Our hypothesis was that we would not need to allocate and copy data while converting to our business objects. Are we right?
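For reference, the protobuf language guide defines a map field as equivalent on the wire to a repeated entry message whose key is field 1 and whose value is field 2. It also says that when parsing, the last value seen for a duplicate key wins. The Python sketch below hand-encodes hypothetical map<string, double> entries and decodes the same bytes two ways: as a dictionary (map semantics) and as a list of pairs (repeated-message semantics). It is not the Google.Protobuf API.

```python
import struct

def varint(n):
    """Encode a non-negative integer as a protobuf varint."""
    out = bytearray()
    while True:
        low7, n = n & 0x7F, n >> 7
        out.append(low7 | 0x80 if n else low7)
        if not n:
            return bytes(out)

def read_varint(buf, pos):
    """Decode a varint starting at buf[pos]; return (value, next position)."""
    result = shift = 0
    while True:
        b = buf[pos]
        pos += 1
        result |= (b & 0x7F) << shift
        shift += 7
        if not b & 0x80:
            return result, pos

def encode_entry(field_number, key, value):
    """One map<string, double> entry: a sub-message with key = 1, value = 2."""
    k = key.encode("utf-8")
    entry = varint((1 << 3) | 2) + varint(len(k)) + k         # field 1, length-delimited
    entry += varint((2 << 3) | 1) + struct.pack("<d", value)  # field 2, 64-bit double
    return varint((field_number << 3) | 2) + varint(len(entry)) + entry

def decode_entries(buf):
    """Yield (key, value) pairs from a run of encoded entries, in wire order."""
    pos = 0
    while pos < len(buf):
        _, pos = read_varint(buf, pos)           # outer key (field number, wire type 2)
        length, pos = read_varint(buf, pos)
        end, key, value = pos + length, "", 0.0  # proto3 defaults if a field is absent
        while pos < end:
            tag, pos = read_varint(buf, pos)
            if tag >> 3 == 1:                    # the key string
                n, pos = read_varint(buf, pos)
                key = buf[pos:pos + n].decode("utf-8")
                pos += n
            else:                                # assumed: field 2, a double
                (value,) = struct.unpack_from("<d", buf, pos)
                pos += 8
        yield key, value

def as_map(buf):
    return dict(decode_entries(buf))   # map semantics: last duplicate key wins

def as_pair_list(buf):
    return list(decode_entries(buf))  # repeated-message semantics: all pairs kept

wire = encode_entry(3, "a", 1.0) + encode_entry(3, "b", 2.0) + encode_entry(3, "a", 5.0)
```

The dictionary view is what the map keyword gives you directly, including the key uniqueness mentioned in the results. The list view is what a hand-written list of key/value pairs yields, which leaves the conversion and any duplicate handling to the application.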

We’ll see in the comparisons. The dictionary object description changes from a list of key/value pairs to a map.

Protobuf V3

This generates code that directly uses the C# dictionary implementation.

The Thrift Story

You know the drill: create the IDL first, then generate the message objects, write the needed conversions and serialize.

Thrift has a much richer IDL, with a lot of things that do not exist in protobuf. In the test example, one big advantage we had was the availability of the “ ” keyword.

This meant that we can now specify r… The rest of the IDL is not that different from protobuf. In our simple case, the Thrift IDL allows us to specify our map of lists in a single line.

Thrift IDL

We found that Thrift mandates a stricter format where you declare classes before using them. It also does not support nested classes.
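To see what a native map of lists looks like on the wire, here is a Python sketch of Thrift's binary protocol for a map<string, list<double>> struct field. The post does not say which Thrift protocol was benchmarked. This sketch assumes TBinaryProtocol (the compact protocol uses varints instead), and the field id is hypothetical.

```python
import struct

# TType codes used by Thrift's binary protocol.
T_DOUBLE, T_STRING, T_MAP, T_LIST = 4, 11, 13, 15

def write_string(s):
    """Strings: i32 byte length (big-endian), then the UTF-8 bytes."""
    data = s.encode("utf-8")
    return struct.pack(">i", len(data)) + data

def write_double_list(values):
    """Lists: element type (1 byte) and i32 size, then big-endian doubles."""
    header = struct.pack(">bi", T_DOUBLE, len(values))
    return header + b"".join(struct.pack(">d", v) for v in values)

def write_map_of_lists(mapping):
    """Maps: key type, value type (1 byte each) and i32 size, then the entries."""
    out = struct.pack(">bbi", T_STRING, T_LIST, len(mapping))
    for key, values in mapping.items():
        out += write_string(key) + write_double_list(values)
    return out

def write_map_field(field_id, mapping):
    """A struct field: field type (1 byte) and i16 field id, then the map value."""
    return struct.pack(">bh", T_MAP, field_id) + write_map_of_lists(mapping)

encoded = write_map_field(1, {"ab": [1.0, 2.0]})
```

Each container carries only a small header with its element types and a 4-byte size, followed by the elements. No wrapper structs are needed for the map or the lists.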

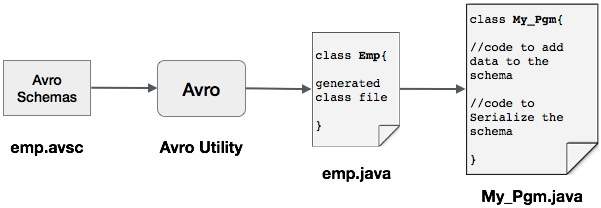

However, it natively supports lists and maps, which simplified the IDL file. Thrift syntax is far more expressive and clear. But does the same hold for performance? Keep reading to find out.

The Avro Story

Apache Avro is a serialization format whose C# support is officially provided by Microsoft. As with the other serialization systems, one can create a schema (in JSON) and generate C# classes from it. We found the JSON schema very verbose and redundant compared to the other serialization formats, and it was a bit difficult to write it and generate the classes. So we took a shortcut and generated the schema from the DataContract annotations.
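Generating a schema from annotated classes, as described above with the DataContract attributes, can be sketched in Python. The sketch walks dataclass fields and emits Avro's JSON schema syntax: records, arrays and maps, where map keys are always strings. The classes Row and Cell are hypothetical, and this is not the C# Avro library's generator. Among other simplifications, it does not handle a named record type that is used twice, which Avro requires to be referenced by name after its first definition.

```python
import json
from dataclasses import dataclass, fields, is_dataclass
from typing import Dict, List, get_args, get_origin

PRIMITIVES = {float: "double", int: "long", str: "string", bool: "boolean"}

def avro_type(tp):
    """Map a Python type annotation to an Avro schema fragment."""
    if tp in PRIMITIVES:
        return PRIMITIVES[tp]
    origin = get_origin(tp)
    if origin is list:
        return {"type": "array", "items": avro_type(get_args(tp)[0])}
    if origin is dict:
        key_type, value_type = get_args(tp)
        if key_type is not str:
            raise TypeError("Avro map keys are always strings")
        return {"type": "map", "values": avro_type(value_type)}
    if is_dataclass(tp):
        return record_schema(tp)
    raise TypeError(f"unsupported type: {tp!r}")

def record_schema(cls):
    """Build an Avro record schema from a dataclass's annotated fields."""
    return {
        "type": "record",
        "name": cls.__name__,
        "fields": [{"name": f.name, "type": avro_type(f.type)} for f in fields(cls)],
    }

@dataclass
class Cell:
    values: Dict[str, List[float]]

@dataclass
class Row:
    key: str
    cells: List[Cell]

print(json.dumps(record_schema(Row), indent=2))
```

Even this two-class model produces a deeply nested schema, which gives a sense of the verbosity described above.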

All we wanted was performance, and no difficult schema language could stop us from evaluating all the options. Note that there is an IDL for Avro, with a Java tool that generates the JSON schema from the IDL file. We didn't evaluate it because we preferred to let the C# Avro library generate the schema from the annotated code. But there was another blocker: inconsistent support for dictionary-like structures. For instance, we found that the C# version correctly serializes a dictionary whose value type is a list, but it refuses to serialize a dictionary of dictionaries. In that case it doesn't throw any exception; it just silently fails to serialize anything. We also came across a bug opened on Microsoft's GitHub two years ago (still open), where a dictionary with more than 1024 keys throws an exception when serialized.

Given these constraints, we had to serialize dictionaries as lists of key/value pairs and create all the intermediary classes with annotations, which made the schema more complex. Is it now going to beat the other formats? Let's find out.
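The workaround of serializing dictionaries as lists of key/value pairs can be sketched as a pair of Python conversions between nested dictionaries and lists of Key/Value records. The record field names, the explicit depth parameter and the PAIR_SCHEMA constant are assumptions for illustration. In the real code, the intermediary annotated classes play this role.

```python
def to_pairs(d, depth):
    """Flatten `depth` levels of nested dictionaries into lists of Key/Value records."""
    if depth == 0:
        return d
    return [{"Key": k, "Value": to_pairs(v, depth - 1)} for k, v in d.items()]

def from_pairs(pairs, depth):
    """Inverse of to_pairs: rebuild `depth` levels of dictionaries."""
    if depth == 0:
        return pairs
    return {p["Key"]: from_pairs(p["Value"], depth - 1) for p in pairs}

# Hypothetical Avro schema for the innermost level: an array of these records
# stands in for a map<string, double>.
PAIR_SCHEMA = {"type": "array", "items": {
    "type": "record", "name": "StringDoublePair",
    "fields": [{"name": "Key", "type": "string"}, {"name": "Value", "type": "double"}]}}

# A dictionary of dictionaries: the shape the C# library silently failed to serialize.
nested = {"a": {"x": 1.0, "y": 2.0}, "b": {}}
flat = to_pairs(nested, depth=2)
# flat == [{"Key": "a", "Value": [{"Key": "x", "Value": 1.0}, {"Key": "y", "Value": 2.0}]},
#          {"Key": "b", "Value": []}]
```

The depth has to come from the schema. Without it, an empty dictionary and an empty list would look the same after conversion.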

Results

We split our benchmarks into two configurations.

Small objects, where the lists and dictionaries contain few items (fewer than 10). This configuration is typical of a web service exchanging small payloads.

Big objects, where we accept lists with many hundreds of items. This configuration is representative of our Map/Reduce jobs.

We measured the following metrics: serialization time, deserialization time and serialized file size. In our case, deserialization is more important than serialization, because a single reducer deserializes the data coming in from all mappers. This creates a bottleneck in the reducer.
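A harness that collects the three metrics above could look like the following Python sketch. It uses the standard json module as a stand-in format, time.perf_counter for timing and medians to damp noise. It is far less rigorous than a dedicated benchmarking library: the only warm-up is one initial round trip, and there is no outlier analysis. The small and big sample objects are hypothetical.

```python
import json
import statistics
import time

def benchmark(obj, serialize, deserialize, repeat=50):
    """Return median serialization/deserialization times (microseconds) and size (bytes)."""
    payload = serialize(obj)
    if deserialize(payload) != obj:  # lossless check, also a minimal warm-up
        raise ValueError("round trip is not lossless")
    ser_times, de_times = [], []
    for _ in range(repeat):
        t0 = time.perf_counter()
        serialize(obj)
        t1 = time.perf_counter()
        deserialize(payload)
        t2 = time.perf_counter()
        ser_times.append((t1 - t0) * 1e6)
        de_times.append((t2 - t1) * 1e6)
    return {
        "serialize_us": statistics.median(ser_times),
        "deserialize_us": statistics.median(de_times),
        "size_bytes": len(payload),
    }

def json_serialize(obj):
    return json.dumps(obj).encode("utf-8")

def json_deserialize(payload):
    return json.loads(payload)

# Hypothetical samples mirroring the two configurations.
small = {f"k{i}": float(i) for i in range(5)}
big = {f"k{i}": [float(j) for j in range(500)] for i in range(50)}

for name, sample in (("small", small), ("big", big)):
    print(name, benchmark(sample, json_serialize, json_deserialize))
```

Other formats plug in by passing a different serialize/deserialize pair with the same signatures.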

Small Objects: File Sizes

Table 2: Small objects serialized file sizes in bytes.

All binary formats have similar sizes except Thrift, which is larger. The 2nd implementation of protobuf is the smallest of the protobuf implementations, thanks to the optimization achieved with the column-based design.

The 3rd implementation of protobuf is a bit bigger, which suggests that the map keyword in protobuf increases the file size slightly. JSON is of course better than XML.

XML is the most verbose, so its file size is comparatively the biggest.

Small Objects: Performance

Table 3: Small objects serialization time in microseconds.

Thrift and protobuf are on par. Avro is a clear loser. Among the different protobuf implementations, the 3rd implementation, which uses map, is around 60% slower than the others. It is not recommended if you're looking for performance, but it inherently guarantees the uniqueness of keys, which is a tradeoff. The 2nd implementation is not optimal here.

The column-based design doesn't show its full effect for small objects. Serialization is generally quicker than deserialization, which makes sense given the object allocation that deserialization requires. The numbers confirm that text formats (XML, JSON) are slower than binary formats. We would never recommend using Avro for handling small objects in C#.

Performance might be different in other languages. But if you're considering developing microservices in C#, Avro would not be a wise choice.

Large Objects

Table 6: Large objects serialization time in milliseconds.

This time, Thrift is a clear winner in terms of performance, with serialization 2.5 times faster than the second-best format and deserialization more than 1.3 times faster. Avro, which was a clear disappointment for small objects, is quite fast. This Avro version is not column based, and we can hope that a column-based design would make it a little faster.

The different implementations of protobuf:

The column-based 2nd implementation of protobuf is the winner. The improvement is not huge, but the impact of this design kicks in when the number of columns becomes very high. As with small objects, serialization is generally quicker than deserialization. Serializing XML is faster than serializing JSON; JSON, on the other hand, is way faster to deserialize.

Final Conclusion

Protobuf and Thrift have similar performance in terms of file sizes and serialization/deserialization time.

Thrift's slightly better performance did not outweigh the easier and less risky integration of Protobuf, which was already in use in our systems; hence our final choice. Protobuf also has better documentation, whereas Thrift's is lacking. Luckily, there was Thrift: The Missing Guide, which helped us implement Thrift quickly for benchmarking.

Avro should not be used if your objects are small. But it looks interesting for its speed if you have very big objects and no complex data structures, since those are difficult to express. Avro tools also look more targeted at the Java world than at cross-language development, and the C# implementation's bugs and limitations are quite frustrating.

The data model we wanted to serialize was a bit peculiar and complex, and the investigation was done using C# technologies. It would be quite interesting to do the same investigation in other programming languages.

Performance could differ for other data models and technologies. We also tried different implementations of protobuf to demonstrate that performance can be improved by changing the design of the data model: on our real problem, the column-based design had a very high impact on performance.

Post written by:
Afaque Khan, Software Engineer, R&D – Engine – Data Science Lab
Frederic Jardon, Software Developer, R&D – Engine – Data Science Lab

(Disclaimer: I haven't used thrift/protobuf/capn-proto; this is based on my understanding from reading their documentation.) I would say the biggest reason for something like cereal is ease of use. There's no schema to worry about, and no need to map your types into the serialization format. Some people want their data in a different format, and cereal makes it fairly easy to write new serialization archive types. Cereal also comes with XML and JSON support, which a lot of people seem to like. cereal supports pretty much everything in the standard library already, so a user can very quickly give even complicated types serialization support. Writing serialization functions is a small amount of work, which I would say is easier than making a well-defined schema. Something like capn-proto is better suited if you need to talk to other languages in a binary-like format, and currently I would say it is also much better for communicating over a network in a streaming fashion. There's a better response describing the key differences.
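In cereal itself (C++), a type typically gets a `template <class Archive> void serialize(Archive& ar)` function that calls `ar(...)` on its fields, and the same function is used for both saving and loading. The Python sketch below imitates that pattern to show why adding a new archive type is cheap. The archive classes and Point are hypothetical, and this is not cereal's API.

```python
import json

class JSONOutputArchive:
    """Saving archive: records each named field and hands the value back unchanged."""
    def __init__(self):
        self.data = {}

    def __call__(self, name, value):
        self.data[name] = value
        return value

class JSONInputArchive:
    """Loading archive: ignores the current value and returns the stored one."""
    def __init__(self, text):
        self.data = json.loads(text)

    def __call__(self, name, value):
        return self.data[name]

class ListOutputArchive:
    """A second, positional format: field names are dropped, order is what matters."""
    def __init__(self):
        self.items = []

    def __call__(self, name, value):
        self.items.append(value)
        return value

class ListInputArchive:
    def __init__(self, items):
        self._it = iter(items)

    def __call__(self, name, value):
        return next(self._it)

class Point:
    def __init__(self, x=0.0, y=0.0, label=""):
        self.x, self.y, self.label = x, y, label

    def serialize(self, ar):
        # The only per-type code: one function serves every archive, in both directions.
        self.x = ar("x", self.x)
        self.y = ar("y", self.y)
        self.label = ar("label", self.label)

def save(obj, archive):
    obj.serialize(archive)
    return archive

def load(cls, archive):
    obj = cls()
    obj.serialize(archive)
    return obj

p = Point(1.5, -2.0, "origin-ish")
text = json.dumps(save(p, JSONOutputArchive()).data)
q = load(Point, JSONInputArchive(text))
```

Adding the positional format required no change to Point. That is the ease-of-use argument made above, traded against the cross-language guarantees a schema gives.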

I've primarily used thrift, but I presume that protobuf is very similar. Writing a custom serialization/deserialization format is not very difficult. The whole point of these is that you decouple the serialization of objects from the format they are serialized to.

That being said, there are compromises you have to make (like no unsigned types), since this has to be language agnostic. However, at the point where you are encoding things in JSON, which doesn't have unsigned integers, I'm not really seeing a whole lot of benefit. The schema management seems like a poor trade-off. Thrift/protobuf/etc. schemas are trivial to manage & don't suffer from the same problems as SQL schemas. (I appreciate that you're just playing devil's advocate.) I'm unclear what distinction you're making between serialization & messaging.

While all of them (now) provide mechanisms to define RPC calls, serialization is still the fundamental underlying concept (the goal with RPC is to define a way for old & new clients to call an API). That's why all of them show up in serialization benchmarks. Is there less code to write? It seems like I have to do a lot of manual serialization & de-serialization instead of specifying the data structure & generating the serialization/deserialization automatically.

I also have to worry about versioning & how to handle added/removed data vs it being completely transparent. Efficiency is an ambiguous term in this field.

What are the criteria? Serialized byte size? Objects serialized per second? Objects deserialized per second?

The distinction is less technical and more usage-oriented.

Serialization: serializing a session context kept in memcached (to be accessible from various webservers).
Messaging: the web browser tells the webserver to buy X items of ID Y.

Serialization is symmetrical: you register information to access it at a later date, generally in the same process or a clone. Messaging, on the other hand, is about communicating between widely different processes, operating with different BOMs (and maybe even different languages). This in turn means that messaging is not tied to one particular representation of the business. In general, each message defines its own representation, requiring translation on both ends: Sender BOM -> Message -> Receiver BOM.

Now, things get blurry because you can perfectly well use messaging (thrift, protobuf, ...) instead of serialization, since it is a superset of the functionality. The cost, of course, is that this requires implementing the translation and has some performance overhead. On the other hand, you get robust solutions for handling multiple versions, and thus backward compatibility and maybe forward compatibility.

And things get blurrier because serialization frameworks also attempt to cover the backward/forward compatibility cases and multiple-version handling. So the two come from different requirements, but lean in the same direction.
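The Sender BOM -> Message -> Receiver BOM translation can be sketched in Python with the example above: buy X items of ID Y. The classes and the numbered-field message are hypothetical. Numbering fields, as Thrift and protobuf do, is what lets a receiver ignore fields it does not know and fall back to defaults for fields a sender omits.

```python
from dataclasses import dataclass

@dataclass
class SenderOrder:
    """Sender-side business object (hypothetical, newer version with `currency`)."""
    item_id: str
    quantity: int
    currency: str

@dataclass
class ReceiverPurchase:
    """Receiver-side business object (hypothetical, older, with its own naming)."""
    product: str
    count: int

def to_message(order):
    """Sender BOM -> message: fields are identified by stable numbers, not names."""
    return {1: order.item_id, 2: order.quantity, 3: order.currency}

def from_message(msg):
    """Message -> receiver BOM.

    Unknown field numbers (here 3) are ignored, so this older receiver tolerates
    a newer sender. Missing fields fall back to defaults, so a message from a
    sender that omits a field still decodes.
    """
    return ReceiverPurchase(product=msg.get(1, ""), count=msg.get(2, 0))

# "The web browser tells the webserver to buy X items of ID Y", with X = 3.
message = to_message(SenderOrder(item_id="Y", quantity=3, currency="EUR"))
purchase = from_message(message)
```

Neither side's business objects appear in the other's code. Only the field numbers are shared, which is the translation cost, and the version robustness, described above.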

Having used protobuf and cereal, I can say that protobuf, with its separate schema language and .proto files AND an additional build step, introduces additional complexity, especially when trying to represent complex structures. Implementing protobuf in an existing project is also much more disruptive than the (often very short) serialize methods cereal provides. There is tremendous value in the simple object serialization provided by cereal, versus having to define separate schemas in separate files alongside your actual code. From a purely subjective standpoint, protobuf looks like an ugly ass sandwich syntactically and forces you to write goobly code to get it to run; it is extraordinarily invasive. The only reason I'd use it again is that it is well supported and has well-defined interaction across languages. For single-language projects, cereal is superior syntactically and workflow-wise. I took an existing library and was able to get the whole scene graph serialized with cereal without modifying any code except for the basic serialize functions, which I added.